Test Post

Testing microformats in this theme, and testing mastodon syndication.

Recently I needed to automate some work involving AWS-Vault, a tool for securely managing AWS access keys on developer workstations. Until then I had been using the file backend, which stores the secrets in files that are encrypted at rest.

Because the file backend does no caching, every time I wanted to use a secret I had to type in the passphrase to unlock it. Combined with a bug my team runs into when applying Terraform (sessions cause reads of the remote state S3 bucket to fail, which we work around with the `--no-session` option), this meant there was no way I could run it in a loop.
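To make the problem concrete, here is a minimal sketch of the kind of loop I mean, assuming one AWS profile per environment. The profile names and the `build_command`/`apply_all` helpers are hypothetical; `AWS_VAULT_BACKEND` selects aws-vault's backend and `--no-session` is the workaround mentioned above. By default the sketch only collects the commands (a dry run) rather than executing them.

```python
import os
import subprocess

# Hypothetical profile names; substitute the profiles from your own ~/.aws/config.
PROFILES = ["dev", "staging", "prod"]


def build_command(profile: str) -> list[str]:
    """Build an aws-vault invocation that runs `terraform apply` with the
    credentials for `profile`. --no-session skips creating an STS session,
    which works around the remote-state S3 read failures."""
    return ["aws-vault", "exec", "--no-session", profile, "--", "terraform", "apply"]


def apply_all(profiles: list[str], dry_run: bool = True) -> list[list[str]]:
    """Collect (and, unless dry_run is True, execute) one aws-vault call per profile.

    With the file backend, each real call unlocks the encrypted store on its
    own, so an interactive run asks for the passphrase once per iteration."""
    env = dict(os.environ, AWS_VAULT_BACKEND="file")  # select the file backend
    commands = []
    for profile in profiles:
        cmd = build_command(profile)
        commands.append(cmd)
        if not dry_run:
            subprocess.run(cmd, env=env, check=True)
    return commands


if __name__ == "__main__":
    for cmd in apply_all(PROFILES):
        print(" ".join(cmd))
```

With three profiles, a real run means typing the passphrase three times, which is exactly what rules out unattended automation.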

For the last few days I’ve been spending time in El Rodadero, Colombia, before setting off to Palomino for Hackerbeach. Another participant arrived today and joined me in relaxing before the main Hackerbeach work in Palomino. It’s peak season here in El Rodadero, which means the restaurants, the malecón, and the beach are all filled to the brim with people, mostly traveling Colombians who come for what is justifiably one of the most gorgeous beaches in the country.

It’s been hell: a four-flight stint lasting the better part of a day, with no sleep off the plane. I read recently that cabin humidity on airplanes is typically around 20%, which is drier than the Sahara desert. Lesson learned: always bring your water bottle and keep it filled. When you’re dehydrated, your mucous membranes have trouble maintaining their layer of mucus and can let more harmful outside matter through. The result is a sensation that can make swallowing unpleasant.

When I first received my new X1 Carbon, I was very excited about the new hardware. More pixels, more lumens, more cores.

After I got over my initial euphoria, I noticed that some things didn’t work, and I confirmed this with a very helpful ArchWiki page.

Among the things that didn’t work were ACPI suspend-to-RAM sleep (since fixed in a BIOS update), the fingerprint sensor (still outstanding), and the WWAN LTE Cat9 card that Lenovo seems so proud of. It supports all the LTE bands you could ever want and can reach speeds of up to 150 Mbit/s. It’s a pity that the Linux drivers are not up to snuff.

I’m looking forward to attending the 2018 IndieWebCamp. It’s a small two-day event in Portland that explores independent web hosting and the technologies that knit independent sites together.

If you’re in Portland, you should attend too!


Here is the presentation material for my talk *The Dark Arts of SSH*. Please note that this is a single HTML page that includes the presenter’s notes.

As promised to my audience, here are the slides from my presentation *Building your First Linux Kernel*.

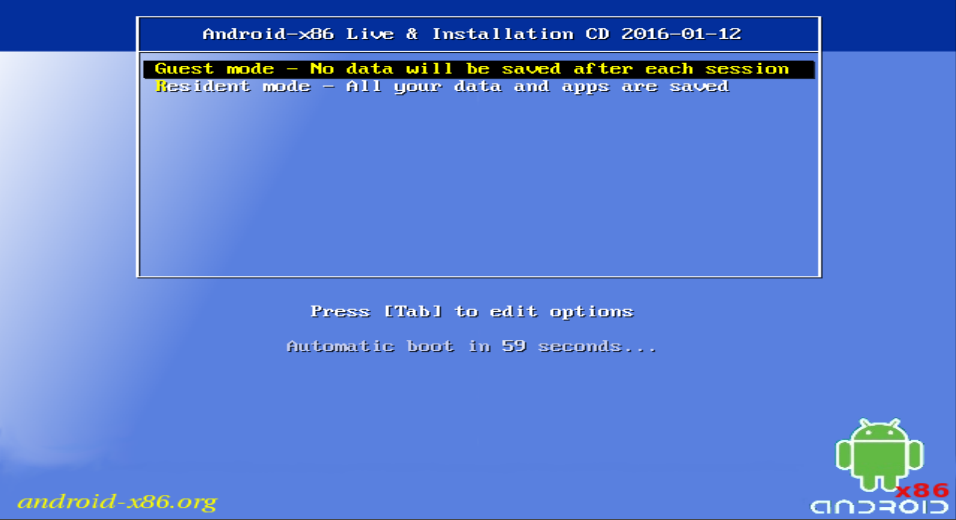

After first trying out RemixOS, the new Android build for PCs, I decided I would rather boot it natively from my SSD than from a USB device. Performance should be better, it would free up my USB thumb drive for other duties, and it would make booting more convenient.

This turned out to be a relatively simple operation. What follows is the method I used. Please note that these instructions assume you are running Linux.